publications

publications by categories in reversed chronological order. generated by jekyll-scholar.

2026

- Preprint

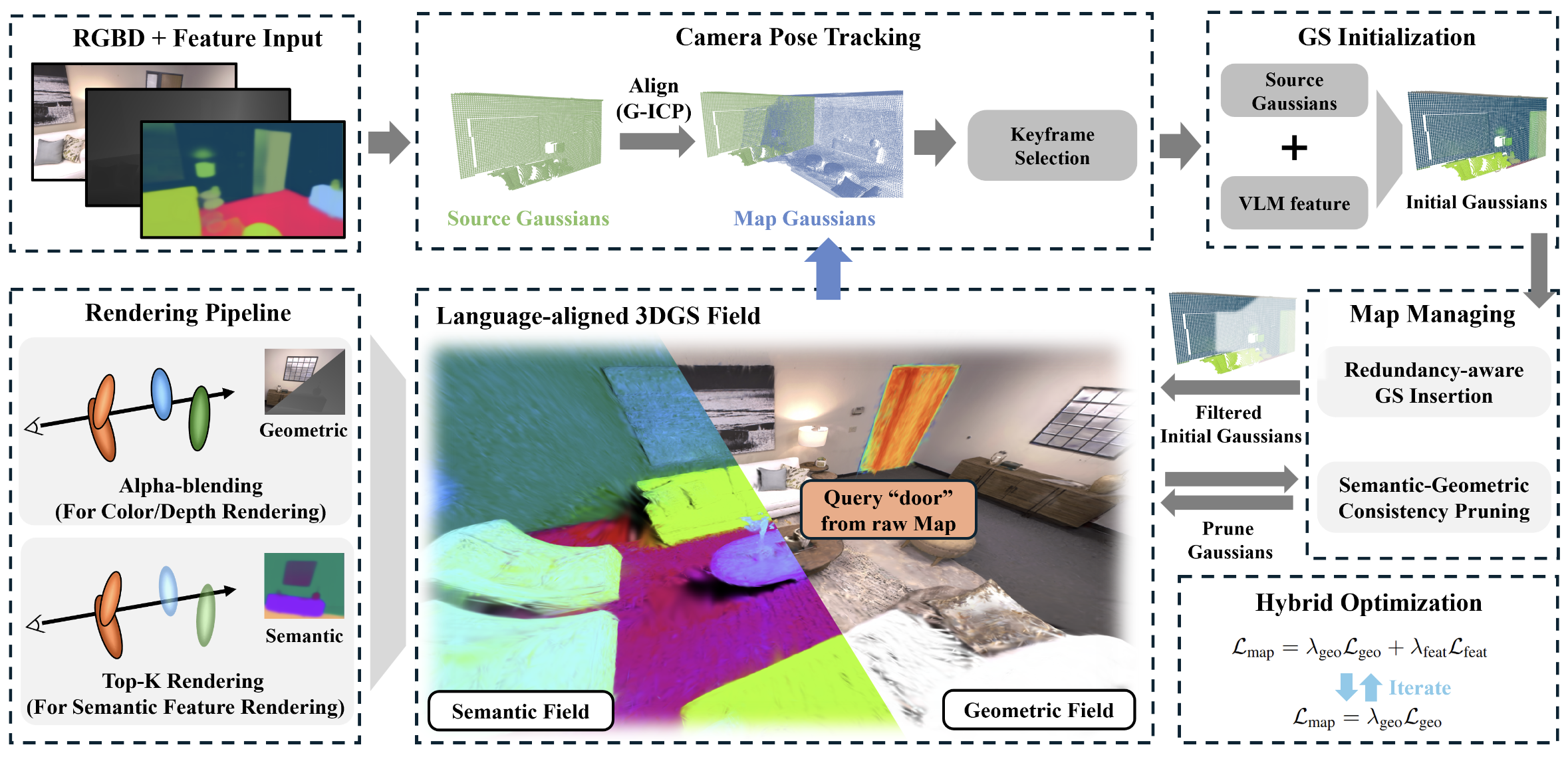

LangGS-SLAM: Real-Time Language-Feature Gaussian Splatting SLAM. Seongbo Ha, Sibaek Lee, Kyungsu Kang, Joonyeol Choi, Seungjun Tak, and Hyeonwoo Yu. arXiv preprint arXiv:2602.06991, 2026. Tags: slam, 3D Perception, Scene Representation.

In this paper, we propose an RGB-D SLAM system that reconstructs a language-aligned dense feature field while sustaining low-latency tracking and mapping. First, we introduce a Top-K Rendering pipeline, a high-throughput and semantic-distortion-free method for efficiently rendering high-dimensional feature maps. To address the resulting semantic–geometric discrepancy and reduce memory consumption, we further design a multi-criteria map management strategy that prunes redundant or inconsistent Gaussians while preserving scene integrity. Finally, a hybrid field optimization framework jointly refines the geometric and semantic fields under real-time constraints by decoupling their optimization frequencies according to field characteristics. The proposed system achieves superior geometric fidelity compared to geometric-only baselines and comparable semantic fidelity to offline approaches while operating at 15 FPS. Our results demonstrate that online SLAM with dense, uncompressed language-aligned feature fields is both feasible and effective, bridging the gap between 3D perception and language-based reasoning.

2025

- Preprint

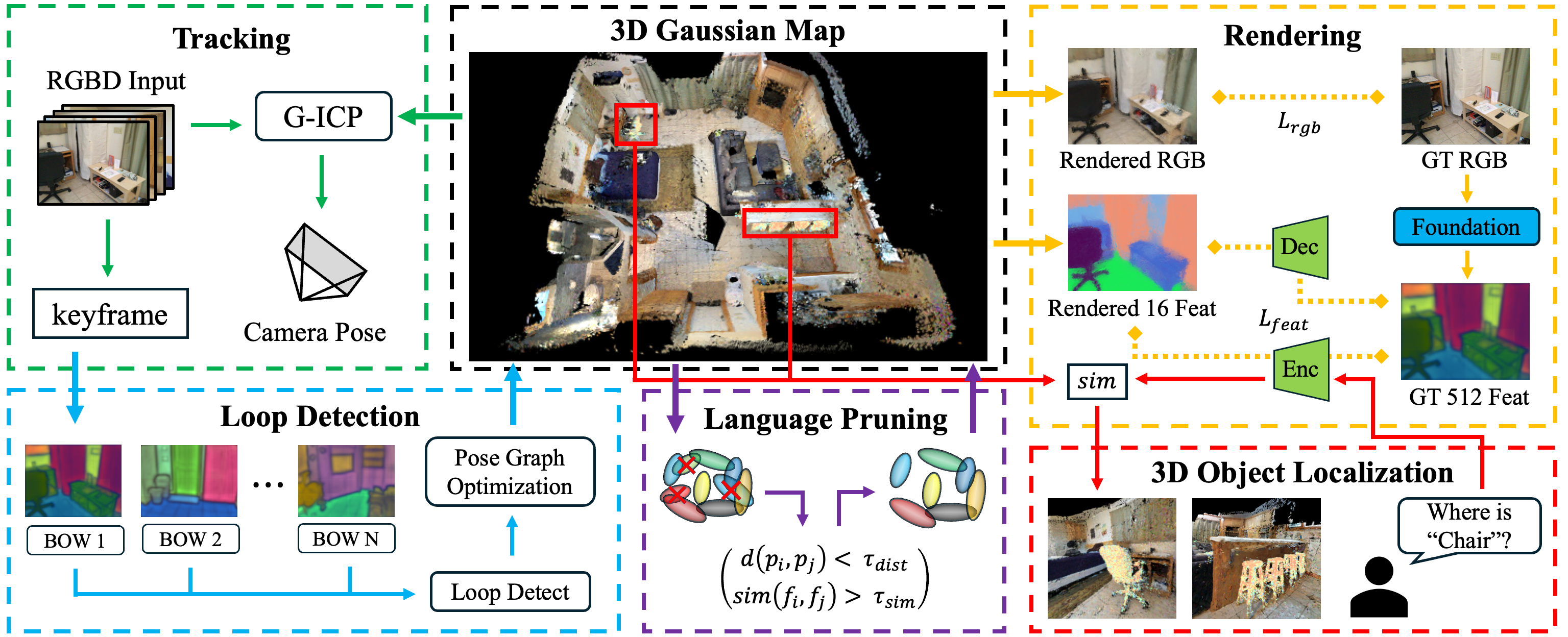

LEGO-SLAM: Language-Embedded Gaussian Optimization SLAM. Sibaek Lee, Seongbo Ha, Kyeongsu Kang, Joonyeol Choi, Seungjun Tak, and Hyeonwoo Yu. arXiv preprint arXiv:2511.16144, 2025. Tags: slam, 3D Perception, loop closing.

Recent advances in 3D Gaussian Splatting (3DGS) have enabled Simultaneous Localization and Mapping (SLAM) systems to build photorealistic maps. However, these maps lack the open-vocabulary semantic understanding required for advanced robotic interaction. Integrating language features into SLAM remains a significant challenge, as storing high-dimensional features demands excessive memory and rendering overhead, while existing methods with static models lack adaptability for novel environments. To address these limitations, we propose LEGO-SLAM (Language-Embedded Gaussian Optimization SLAM), the first framework to achieve real-time, open-vocabulary mapping within a 3DGS-based SLAM system. At the core of our method is a scene-adaptive encoder-decoder that distills high-dimensional language embeddings into a compact 16-dimensional feature space. This design reduces the memory per Gaussian and accelerates rendering, enabling real-time performance. Unlike static approaches, our encoder adapts online to unseen scenes. These compact features also enable a language-guided pruning strategy that identifies semantic redundancy, reducing the map’s Gaussian count by over 60% while maintaining rendering quality. Furthermore, we introduce a language-based loop detection approach that reuses these mapping features, eliminating the need for a separate detection model. Extensive experiments demonstrate that LEGO-SLAM achieves competitive mapping quality and tracking accuracy, all while providing open-vocabulary capabilities at 15 FPS.

- RA-L

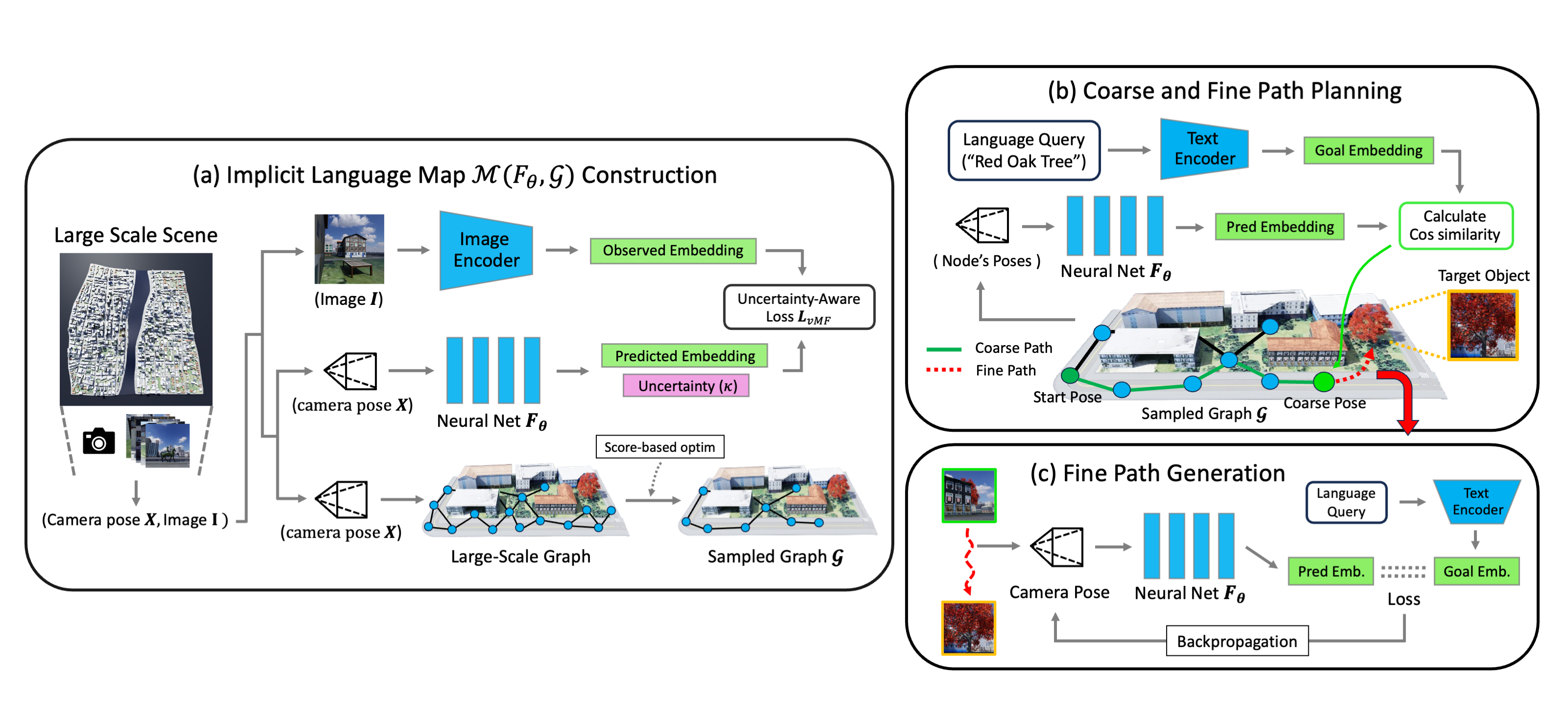

LAMP: Implicit Language Map for Robot Navigation. Sibaek Lee, Hyeonwoo Yu, Giseop Kim, and Sunwook Choi. IEEE Robotics and Automation Letters (RA-L), 2025. Tags: Vision Language Model, Robot Navigation, Scene Representation, Embedded Systems.

Recent advances in vision-language models have made zero-shot navigation feasible, enabling robots to interpret and follow natural language instructions without requiring labeling. However, existing methods that explicitly store language vectors in grid or node-based maps struggle to scale to large environments due to excessive memory requirements and limited resolution for fine-grained planning. We introduce LAMP (Language Map), a novel neural language field-based navigation framework that learns a continuous, language-driven map and directly leverages it for fine-grained path generation. Unlike prior approaches, our method encodes language features as an implicit neural field rather than storing them explicitly at every location. By combining this implicit representation with a sparse graph, LAMP supports efficient coarse path planning and then performs gradient-based optimization in the learned field to refine poses near the goal. Our two-stage pipeline of coarse graph search followed by language-driven, gradient-guided optimization is the first application of an implicit language map for precise path generation. This refinement is particularly effective at selecting goal regions not directly observed by leveraging semantic similarities in the learned feature space. To further enhance robustness, we adopt a Bayesian framework that models embedding uncertainty via the von Mises–Fisher distribution, thereby improving generalization to unobserved regions. To scale to large environments, LAMP employs a graph sampling strategy that prioritizes spatial coverage and embedding confidence, retaining only the most informative nodes and substantially reducing computational overhead. Our experimental results, both in NVIDIA Isaac Sim and on a real multi-floor building, demonstrate that LAMP outperforms existing explicit methods in both memory efficiency and fine-grained goal-reaching accuracy, opening new possibilities for scalable, language-driven robot navigation.
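For readers unfamiliar with the von Mises–Fisher distribution mentioned in the LAMP abstract, its standard density over unit vectors is given below. This is the textbook definition only; how LAMP parameterizes or predicts the mean direction and concentration is not stated in the abstract, and the symbols $\mathbf{x}$, $\boldsymbol{\mu}$, $\kappa$, and $p$ are notation chosen here for illustration.

$$
f_p(\mathbf{x};\, \boldsymbol{\mu}, \kappa) = C_p(\kappa)\, \exp\!\left(\kappa\, \boldsymbol{\mu}^{\top} \mathbf{x}\right),
\qquad
C_p(\kappa) = \frac{\kappa^{p/2-1}}{(2\pi)^{p/2}\, I_{p/2-1}(\kappa)}
$$

Here $\mathbf{x}$ and $\boldsymbol{\mu}$ are unit vectors in $\mathbb{R}^p$, $\kappa > 0$ is the concentration, and $I_v$ is the modified Bessel function of the first kind of order $v$. A larger $\kappa$ concentrates the mass around $\boldsymbol{\mu}$, which is why $\kappa$ can serve as a confidence measure for a normalized embedding.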

- Preprint

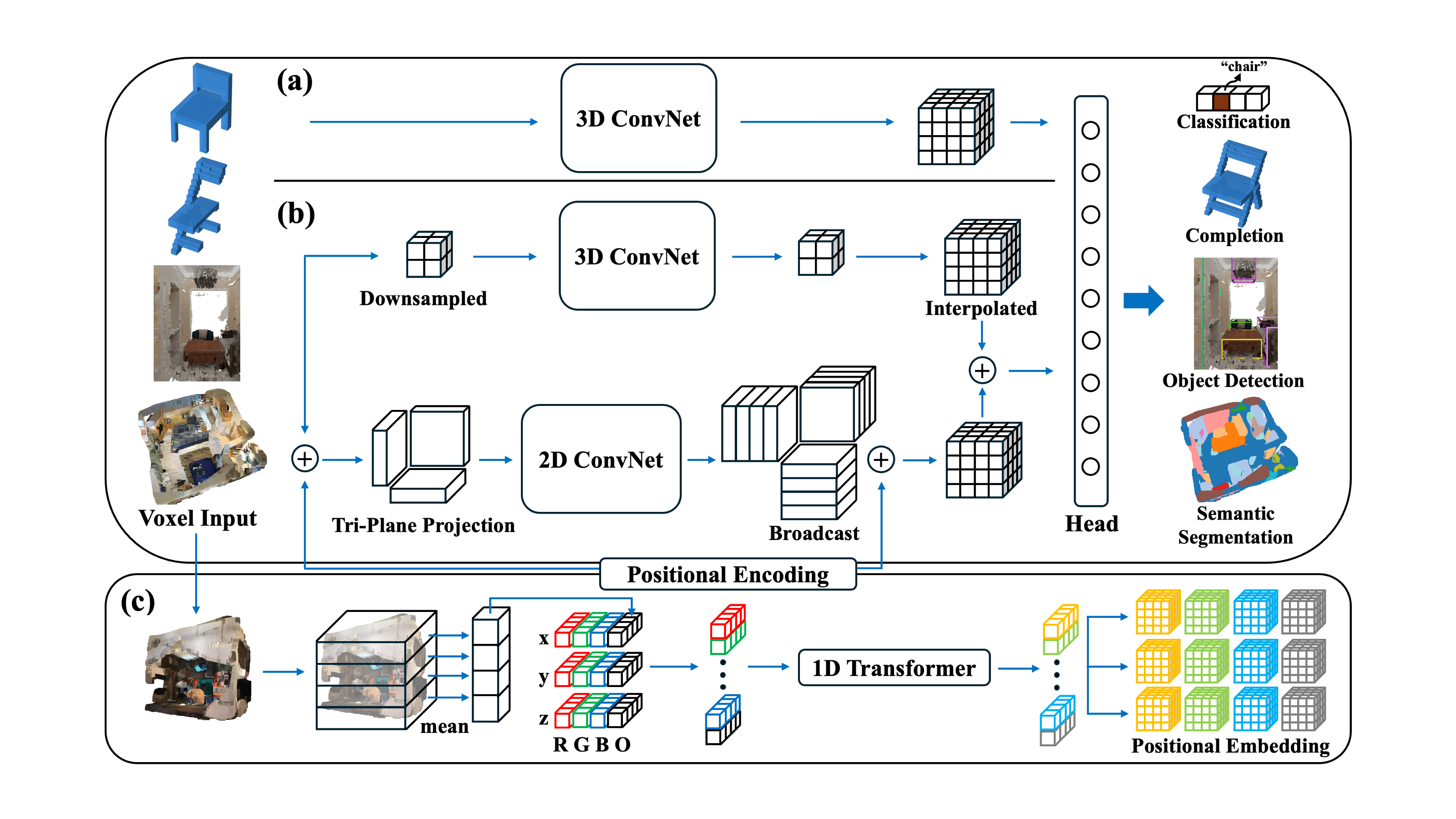

Efficient 3D Perception on Embedded Systems via Interpolation-Free Tri-Plane Lifting and Volume Fusion. Sibaek Lee, Jiung Yeon, and Hyeonwoo Yu. arXiv preprint arXiv:2509.14641, 2025. Tags: 3D Perception, Embedded Systems.

Dense 3D convolutions provide high accuracy for perception but are too computationally expensive for real-time robotic systems. Existing tri-plane methods rely on 2D image features with interpolation, point-wise queries, and implicit MLPs, which makes them computationally heavy and unsuitable for embedded 3D inference. As an alternative, we propose a novel interpolation-free tri-plane lifting and volumetric fusion framework that directly projects 3D voxels into plane features and reconstructs a feature volume through broadcast and summation. This shifts nonlinearity to 2D convolutions, reducing complexity while remaining fully parallelizable. To capture global context, we add a low-resolution volumetric branch fused with the lifted features through a lightweight integration layer, yielding a design that is both efficient and end-to-end GPU-accelerated. To validate the effectiveness of the proposed method, we conduct experiments on classification, completion, segmentation, and detection, and we map the trade-off between efficiency and accuracy across tasks. Results show that classification and completion retain or improve accuracy, while segmentation and detection trade modest drops in accuracy for significant computational savings. On-device benchmarks on an NVIDIA Jetson Orin Nano confirm robust real-time throughput, demonstrating the suitability of the approach for embedded robotic perception.
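The "project to planes, then broadcast and sum" idea in the abstract above can be illustrated with a minimal NumPy sketch. This is not the authors' implementation: the function names `lift_to_planes` and `fuse_planes` are hypothetical, mean pooling as the projection operator is an assumption, and the 2D convolutions and low-resolution volumetric branch described in the abstract are omitted.

```python
import numpy as np


def lift_to_planes(vol):
    """Project a (C, X, Y, Z) voxel feature volume onto three axis-aligned planes.

    Mean pooling along the collapsed axis is an assumption made for this
    sketch; the abstract does not say which projection operator is used.
    """
    p_xy = vol.mean(axis=3)  # collapse Z -> (C, X, Y)
    p_xz = vol.mean(axis=2)  # collapse Y -> (C, X, Z)
    p_yz = vol.mean(axis=1)  # collapse X -> (C, Y, Z)
    return p_xy, p_xz, p_yz


def fuse_planes(p_xy, p_xz, p_yz):
    """Rebuild a (C, X, Y, Z) volume by broadcasting each plane along its
    missing axis and summing. No interpolation or per-point query is needed."""
    return (
        p_xy[:, :, :, None]    # (C, X, Y, 1)
        + p_xz[:, :, None, :]  # (C, X, 1, Z)
        + p_yz[:, None, :, :]  # (C, 1, Y, Z)
    )


rng = np.random.default_rng(0)
vol = rng.random((4, 5, 6, 7))  # toy input: 4 channels on a 5 x 6 x 7 grid
p_xy, p_xz, p_yz = lift_to_planes(vol)
# In a full model, 2D convolutions would process each plane at this point.
fused = fuse_planes(p_xy, p_xz, p_yz)
print(fused.shape)  # (4, 5, 6, 7)
```

Each fused voxel is simply the sum of the three plane features it projects onto, so the reconstruction costs one broadcasted addition per voxel and parallelizes trivially.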

- RA-L

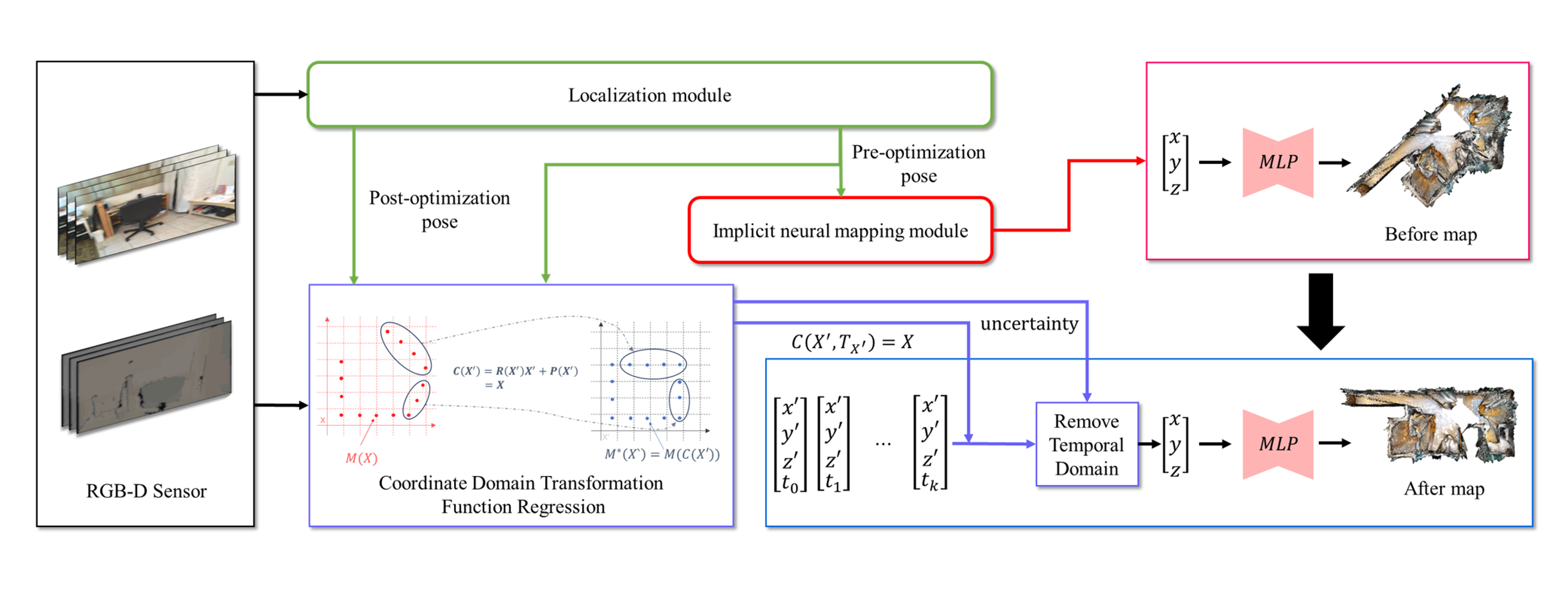

Spatial Coordinate Transformation for 3D Neural Implicit Mapping. Kyeongsu Kang, Seongbo Ha, Sibaek Lee, and Hyeonwoo Yu. IEEE Robotics and Automation Letters, 2025. Tags: NeRF, 3D Representation, Gaussian Process.

Implicit Neural Representation (INR)-based SLAM has a critical issue: because the neural network’s weights themselves represent the map, all keyframes must be stored in memory for post-training whenever remapping is needed. To address this, previous INR-based SLAM work has proposed methods to modify INR-based maps without changing the neural network’s weights. However, these approaches suffer from low memory efficiency and increased space complexity. In this paper, we introduce a remapping method for INR-based maps that does not require post-training the neural network’s weights and incurs low space cost. The problem of function modification, such as updating a map defined as a neural network function, can be viewed as transforming the function’s domain. Leveraging function domain transformation, we propose a method to update INR-based maps by identifying the transformation function between the post-optimization and pre-optimization domains. Additionally, to prevent cases where the transformation between the post-optimization and pre-optimization domains does not form a one-to-many relationship, we introduce a temporal domain and propose a method to find the spatial coordinate transformation function accordingly. Evaluations on INR-based techniques demonstrate that our proposed method effectively updates maps while requiring significantly less memory than existing remapping approaches.

- RA-L

Bayesian NeRF: Quantifying Uncertainty With Volume Density for Neural Implicit Fields. Sibaek Lee, Kyeongsu Kang, Seongbo Ha, and Hyeonwoo Yu. IEEE Robotics and Automation Letters, 2025. Tags: NeRF, 3D Representation, Bayesian Learning.

We present a Bayesian Neural Radiance Field (NeRF), which explicitly quantifies uncertainty in the volume density by modeling uncertainty in the occupancy, without the need for additional networks, making it particularly suited for challenging observations and uncontrolled image environments. NeRF diverges from traditional geometric methods by providing an enriched scene representation, rendering color and density in 3D space from various viewpoints. However, NeRF encounters limitations in addressing uncertainties solely through geometric structure information, leading to inaccuracies when interpreting scenes with insufficient real-world observations. While previous efforts have relied on auxiliary networks, we propose a series of formulation extensions to NeRF that manage uncertainties in density, both color and density, and occupancy, all without the need for additional networks. In experiments, we show that our method significantly enhances performance on RGB and depth images on a comprehensive dataset. Given that uncertainty modeling aligns well with the inherently uncertain environments of Simultaneous Localization and Mapping (SLAM), we applied our approach to SLAM systems and observed notable improvements in mapping and tracking performance. These results confirm the effectiveness of our Bayesian NeRF approach in quantifying uncertainty based on geometric structure, making it a robust solution for challenging real-world scenarios.

2024

- IROS

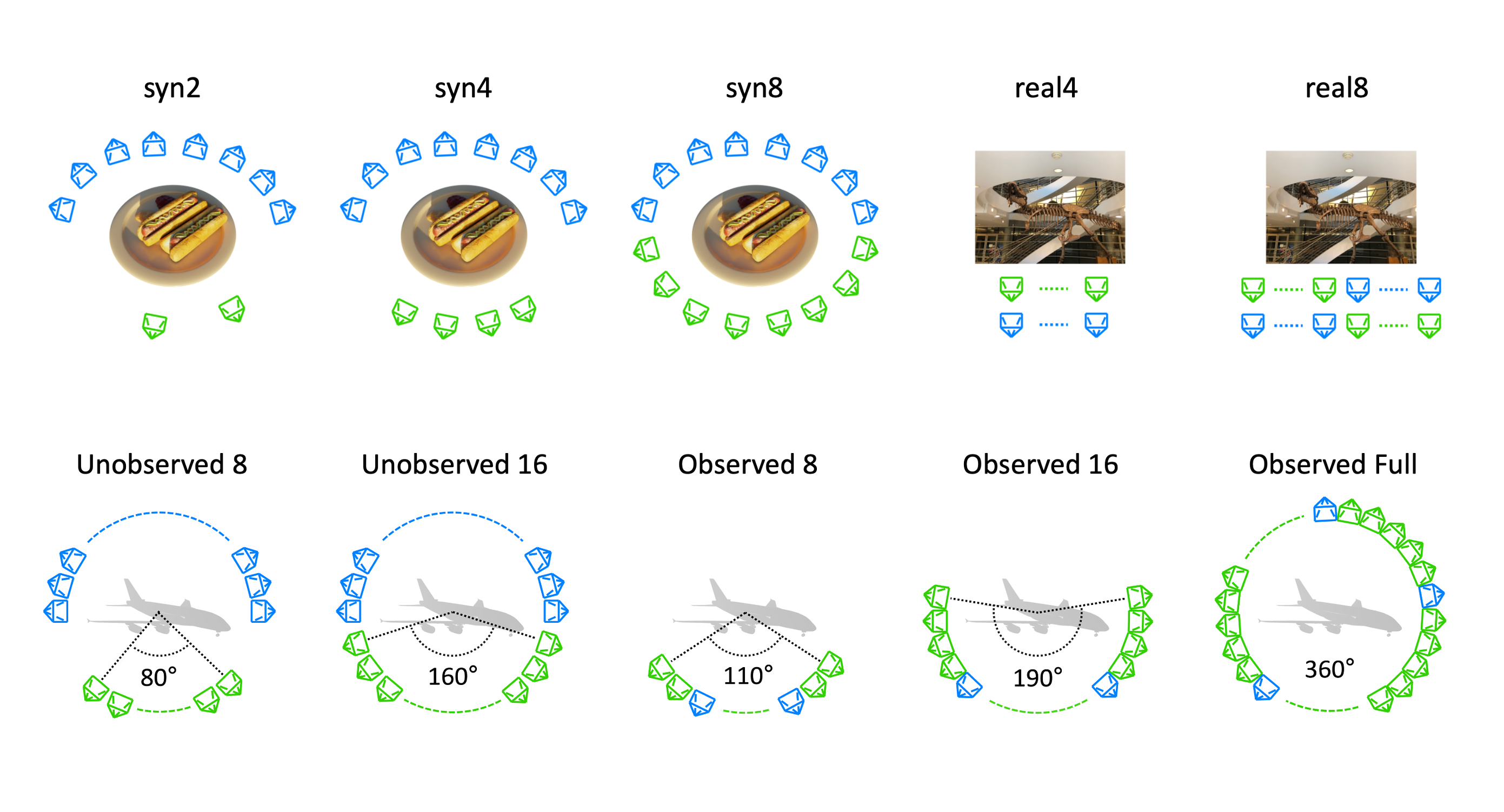

Just flip: Flipped observation generation and optimization for neural radiance fields to cover unobserved view. Sibaek Lee, Kyeongsu Kang, and Hyeonwoo Yu. In 2024 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), 2024. Tags: NeRF, 3D Representation, Bayesian Learning.

With the advent of Neural Radiance Field (NeRF), representing 3D scenes through multiple observations has shown significant improvements. Since this cutting-edge technique can obtain high-resolution renderings by interpolating dense 3D environments, various approaches have been proposed to apply NeRF for the spatial understanding of robot perception. However, previous works struggle to represent unobserved scenes or views along unexplored robot trajectories, as they do not take into account 3D reconstruction without observation information. To overcome this problem, we propose a method that generates flipped observations to cover the absent observations along unexplored robot trajectories. Our approach involves a data augmentation technique for 3D reconstruction using NeRF, by flipping observed images and estimating the 6-DoF poses of the flipped cameras. Furthermore, to ensure the NeRF model operates robustly in general scenarios, we also propose a training method that adjusts the flipped pose and considers the uncertainty in flipped images accordingly. Our technique does not utilize an additional network, making it simple and fast, thus ensuring its suitability for robotic applications where real-time performance is crucial.

2023

- Preprint

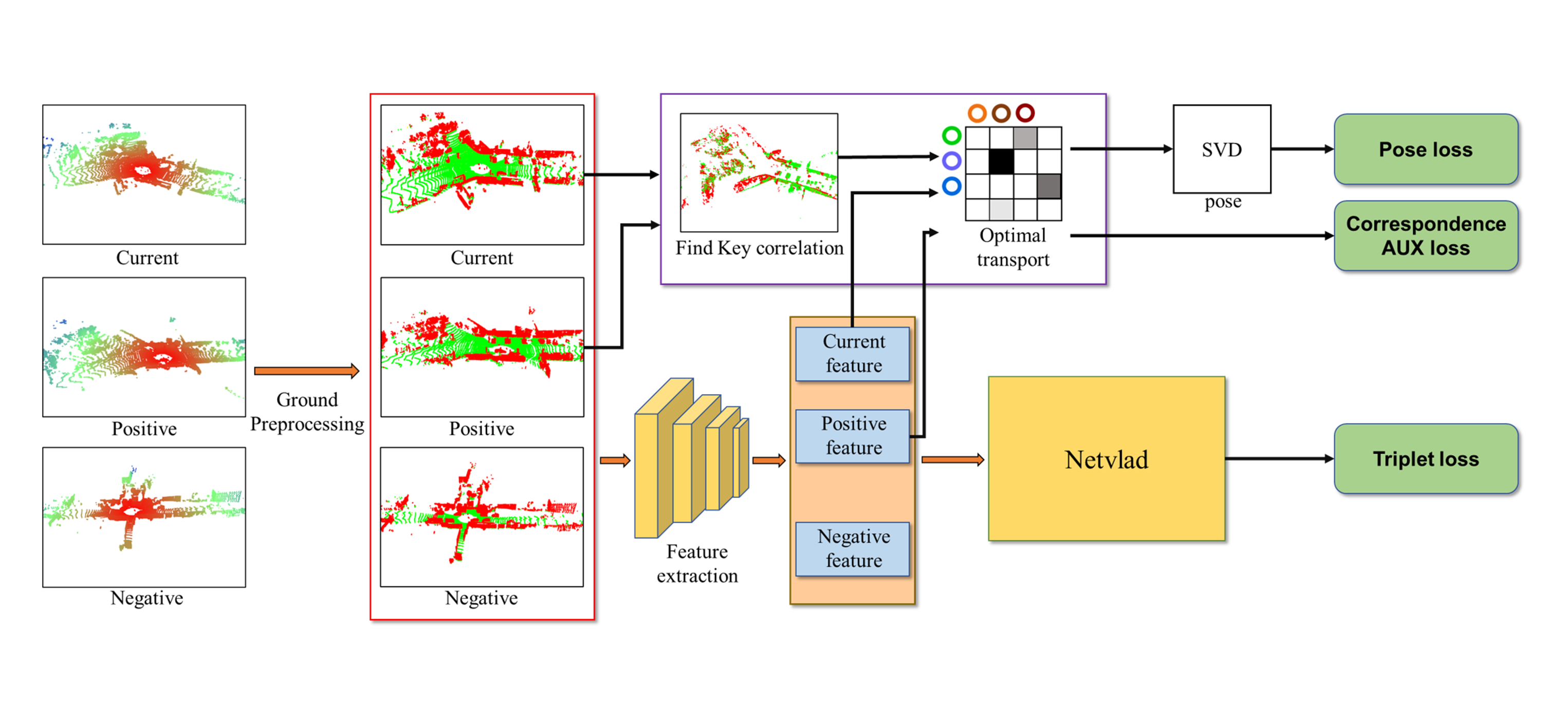

Necessity feature correspondence estimation for large-scale global place recognition and relocalization. Kyeongsu Kang, Minjae Lee, and Hyeonwoo Yu. arXiv preprint arXiv:2303.06308, 2023. Tags: Loop Closing, Place Recognition, SLAM.

To find an accurate global 6-DoF transform through feature matching, various end-to-end architectures have been proposed. However, existing methods have typically not considered the geometrical false correspondence of features, resulting in unnecessary features being involved in global place recognition and relocalization. In this paper, we introduce a robust correspondence estimation method that removes unnecessary features and highlights necessary features simultaneously. To emphasize necessary features while disregarding unnecessary ones, we leverage the geometric correlation between two scenes represented in 3D LiDAR point clouds. We thus introduce the correspondence auxiliary loss that finds key correlations based on the point align algorithm, achieving end-to-end training of the proposed networks with robust correspondence estimation. Considering that the ground, with its numerous plane patches, can disrupt correspondence estimation by acting as an outlier, we propose a preprocessing step aimed at mitigating the influence of dominant plane patches from the perspective of addressing negative correspondences. Evaluation results on the dynamic urban driving dataset show that our proposed method can improve the performance of both global place recognition and relocalization tasks, highlighting the significance of estimating robust feature correspondence in these processes.